Practical Garbage Collection, part 1 – Introduction

Posted: December 26, 2011 Filed under: Uncategorized | Tags: gc, jvm, practicalgc 46 CommentsIntroduction

This is the first part of a series of blog posts I intend to write, whose aim will be to explain how garbage collection works in the real world (in particular, with the JVM). I will cover some theory that I believe is necessary to understand garbage collection enough for practical purposes, but will keep it to a minimum. The motivation is that garbage collection related questions keeps coming up in variety of circumstances, including (for example) on the Cassandra mailing list. The problem when trying to help is that explaining the nuances of garbage collection is too much of an effort to do ad-hoc in a mailing list reply tailored to that specific situation, and you rarely have enough information about the situation to tell someone what their particular problem is caused by.

I hope that this guide will be something I can point to in answering these questions. I hope that it will be detailed enough to be useful, yet easy to digest and non-academic enough for a broad audience.

I very much appreciate any feedback on what I need to clarify, improve, rip out completely, etc.

Much of the information here is not specific to Java. However, in order to avoid constantly invoking generic and abstract terminology, I am going to speak in concrete terms of the Hotspot JVM wherever possible.

Why should anyone have to care about the garbage collector?

That is a good question. The perfect garbage collector would do its job without a human ever noticing that it exists. Unfortunately, there exists no known perfect (whatever perfection means) garbage collection algorithm. Further, the selection of garbage collectors practically available to most people is additionally limited to a subset of garbage collection algorithms that are in fact implemented. (Similarly, malloc is not perfect either and has its issues, with multiple implementations available with different characteristics. However, this post is not trying to contrast automatic and explicit memory management, although that is an interesting topic.)

The reality is that, as with many technical problems, there are some trade-offs involved. As a rule of thumb, if you’re using the freely available Hotspot based JVM:s (Oracle/Sun, OpenJDK), you mostly notice the garbage collector if you care about latency. If you do not, chances are the garbage collector will not be a bother – other than possibly to select a maximum heap size different from the default.

By latency, in the context of garbage collection, I mean pause times. The garbage collector needs to pause the application sometimes in order to do some of its work; this is often referred to as a stop-the-world pause (the “world” being the observable universe from the perspective of the Java application, or mutator in GC speak (because it is mutating the heap while the garbage collector is trying to collect it). It is important to note that while all practically available garbage collectors impose stop-the-world pauses on the application, the frequency and duration of these pauses vary greatly with the choice of garbage collector, garbage collector settings, and application behavior.

As we shall see, there exists garbage collection algorithms that attempt to avoid the need to ever collect the entire heap in a stop-the-world pause. The reason this is an important property is that if at any point (even if infrequent), you stop the application for a complete collection of the heap, the pause times suffered by the application scale proportionally to the heap size. This is typically the main thing you want to avoid when you care about latency. There are other concerns as well, but this is usually tbe big one.

Tracing vs. reference counting

You may have heard of reference counting being used (for example, cPython uses a reference counting scheme for most of it’s garbage collection work). I am not going to talk much about it because it is not relevant to JVM:s, except to say two things:

- One property that reference counting garbage collection has is that an object will be known to be unreachable immediately at the point where the last reference is removed.

- Reference counting will not detect as unreachable cyclic data structures, and has some other problems that cause it to not be the end-all be-all garbage collection choice.

The JVM instead uses what is known as a tracing garbage collector. It is called tracing because, at least at an abstract level, the process of identifying garbage involves taking the root set (things like your local variables on your stack or global variables) and tracing a path from those objects to all objects that are directly or indirectly reachable from said root set. Once all reachable (live) objects have been identified, the objects eligible for being freed by the garbage collector have been identified by a proces of elimination.

Basic stop-the-world, mark, sweep, resume

A very simple tracing garbage collector works using the following process:

- Pause the application completely.

- Mark all objects that are reachable (from the root set, see above) by tracing the object graph (i.e., following references recursively).

- Free all objects that were not reachable.

- Resume the application.

In a single-threaded world, this is pretty easy to imagine: The call that is responsible for allocating a new object will either return the new object immediately, or, if the heap is full, initiate the above process to free up space, followed by completing the allocation and returning the object.

None of the JVM garbage collectors work like this. However, it is good to understand this basic form of a garbage collector, as the available garbage collectors are essentially optimizations of the above process.

The two main reasons why the JVM does not implement garbage collection like this are:

- Every single garbage collection pause will be long enough to collect the entire heap; in other words, it has very poor latency.

- For almost all real-world applications, it is by far not the most efficient way to perform garbage collection (it has a high CPU overhead).

Compacting vs. non-compacting garbage collection

An important distinction between garbage collectors is whether or not they are compacting. Compacting refers to moving objects around (in memory) to as to collect them in one dense region of memory, instead of being spread out sparsely over a larger region.

Real-world analogy: Consider a room full of things on the floor in random places. Taking all these things and stuffing them tightly in a corner is essentially compacting them; freeing up floor space. Another way to remember what compaction is, is to envision one of those machines that take something like a car and compact it together into a block of metal, thus taking less space than the original car by eliminating all the space occupied by air (but as someone has pointed out, while the car id destroyed, objects on the heap are not!).

By contrast a non-compacting collector never moves objects around. Once an object has been allocated in a particular location in memory, it remains there forever or until it is freed.

There are some interesting properties of both:

- The cost of performing a compacting collection is a function of the amount of live data on the heap. If only 1% of data is live, only 1% of data needs to be compacted (copied in memory).

- By contrast, in a non-compacting collector objects that are no longer reachable still imply book keeping overhead as their memory locations must be kept track of as being freed, to be used in future allocations.

- In a compacting collector, allocation is usually done via a bump-the-pointer approach. You have some region of space, and maintain your current allocation pointer. If you allocate an object of n bytes, you simply bump that pointer by n (I am eliding complications like multi-threading and optimizations that implies).

- In a non-compacting collector, allocation involves finding where to allocate using some mechanism that is dependent on the exact mechanism used to track the availability of free memory. In order to satisfy an allocation of n bytes, a contiguous region of n bytes free space must be found. If one cannot be found (because the heap is fragmented, meaning it consists of a mixed bag of free and allocated space), the allocation will fail.

Real-world analogy: Consider your room again. Suppose you are a compacting collector. You can move things around on the floor freely at your leisure. When you need to make room for that big sofa in the middle of the floor, you move other things around to free up an appropriately sized chunk of space for the sofa. On the other hand, if you are a non-compacting collector, everything on the floor is nailed to it, and cannot be moved. A large sofa might not fit, despite the fact that you have plenty of floor space available – there is just no single space large enough to fit the sofa.

Generational garbage collection

Most real-world applications tend to perform a lot allocation of short-lived objects (in other words, objects that are allocated, used for a brief period, and then no longer referenced). A generational garbage collector attempts to exploit this observation in order to be more CPU efficient (in other words, have higher throughput). (More formally, the hypothesis that most applications have this behavior is known as the weak generational hypothesis.)

It is called “generational” because objects are divided up into generations. The details will vary between collectors, but a reasonable approximation at this point is to say that objects are divided into two generations:

- The young generation is where objects are initially allocated. In other words, all objects start off being in the young generation.

- The old generation is where objects “graduate” to when they have spent some time in the young generation.

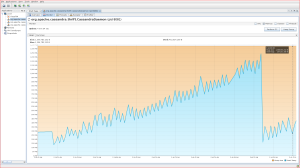

The reason why generational collectors are typically more efficient, is that they collect the young generation separately from the old generation. Typical behavior of an application in steady state doing allocation, is frequent short pauses as the young generation is being collected – punctuated by infrequent but longer pauses as the old generation fills up and triggers a full collection of the entire heap (old and new). If you look at a heap usage graph of a typical application, it will look similar to this:

The ongoing sawtooth look is a result of young generation garbage collections. The large dip towards the end is when the old generation became full and the JVM did a complete collection of the entire heap. The amount of heap usage at the end of that dip is a reasonable approximation of the actual live set at that point in time. (Note: This is a graph from running a stress test against a Cassandra instance configured to use the default JVM throughput collector; it does not reflect out-of-the-box behavior of Cassandra.)

Note that simply picking the “current heap usage” at an arbitrary point in time on that graph will not give you an idea of the memory usage of the application. I cannot stress that point enough. What is typically considered the memory “usage” is the live set, not the heap usage at any particular time. The heap usage is much more a function of the implementation details of the garbage collector; the only effect on heap usage from the memory usage of the application is that it provides a lower bound on the heap usage.

Now, back to why generational collectors are typically more efficient.

Suppose our hypothetical application is such that 90% of all objects die young; in other words, they never survive long enough to be promoted to the old generation. Further, suppose that our collection of the young generation is compacting (see previous sections) in nature. The cost of collecting the young generation is now roughly that of tracing and copying 10% of the objects it contains. The cost associated with the remaining 90% was quite small. Collection of the young generation happens when it becomes full, and is a stop-the-world pause.

The 10% of objects that survived may be promoted to the old generation immediately, or they may survive for another round or two in young generation (depending on various factors). The important overall behavior to understand however, is that objects start off in the young generation, and are promoted to the old generation as a result of surviving in the young generation.

(Astute readers may have noticed that collecting the young generation completely separately is not possible – what if an object in the old generation has a reference to an object in the new generation? This is indeed something a garbage collector must deal with; a future post will talk about this.)

The optimization is quite dependent on the size of the young generation. If the size is too large, it may be so large that the pause times associated with collecting it is a noticeable problem. If the size is too small, it may be that even objects that die young do not die quite quickly enough to still be in the young generation when they die. Recall that the young generation is collected when it becomes full; this means that the smaller it is, the more often it will be collected. Further recall that when objects survive the young generation, they get promoted to the old generation. If most objects, despite dying young, never have a chance to die in the young generation because it is too small – they will get promoted to the old generation and the optimization that the generational garbage collector is trying to make will fail, and you will take the full cost of collecting the object later on in the old generation (plus the up-front cost of having copied it from the young generation).

Parallel collection

The point of having a generational collector is to optimize for throughput; in other words, the total amount of work the application gets to do in a particular amount of time. As a side-effect, most of the pauses incurred due to garbage collection also become shorter. However, no attempt is made to eliminate the periodic full collections which will imply a pause time of whatever is necessary to complete a full collection.

The throughput collector does do one thing which is worth mentioning in order to mitigate this: It is parallel, meaning it uses multiple CPU cores simultaneously to speed up garbage collection. This does lead to shorter pause times, but there is a limit to how far you can go – even in an unrealistic perfect situation of a linear speed-up (meaning, double CPU count -> half collection time) you are limited by the number of CPU cores on your system. If you are collecting a 30 GB heap, that is going to take some significant time even if you do so with 16 parallel threads.

In garbage collection parlance, the word parallel is used to refer to a collector that does work on multiple CPU cores at the same time.

Incremental collection

Incremental in a garbage collection context refers to dividing up the work that needs to be done in smaller chunks, often with the aim of pausing the applications for multiple brief periods instead of a single long pause. The behavior of the generational collector described above is partially incremental in the sense that the young generation collectors constitute incremental work. However, as a whole, the collection process is not incremental because of the full heap collections incurred when the old generation becomes full.

Other forms of incremental collections are possible; for example, a collector can do a tiny bit of garbage collection work for every allocation performed by the application. The concept is not tied to a particular implementation strategy.

Concurrent collection

Concurrent in a garbage collection context refers to performing garbage collection work concurrently with the application (mutator). For example, on an 8 core system, a garbage collector might keep two background threads that do garbage collection work while the application is running. This allows significant amounts of work to be done without incurring an application pause, usually at some cost of throughput and implementation complexity (for the garbage collector implementor).

Available Hotspot garbage collectors

The default choice of garbage collector in Hotspot is the throughput collector, which is a generational, parallel, compacting collector. It is entirely optimized for throughput; total amount of work achieved by the application in a given time period.

The traditional alternative for situations where latency/pause times are a concern, is the CMS collector. CMS stands for Concurrent Mark & Sweep and refers to the mechanism used by the collector. The purpose of the collector is to minimize or even eliminate long stop-the-world pauses, limiting garbage collection work to shorter stop-the-world (often parallel) pauses, in combination with longer work performed concurrently with the application. An important property of the CMS collector is that it is not compacting, and thus suffers from fragmentation concerns (more on this in a later blog post).

As of later versions of JDK 1.6 and JDK 1.7, there is a new garbage collector available which is called G1 (which stands for Garbage First). It’s aim, like the CMS collector, is to try to mitigate or eliminate the need for long stop-the-world pauses and it does most of it’s work in parallel in short stop-the-world incremental pauses, with some work also being done concurrently with the application. Contrary to CMS, G1 is a compacting collector and does not suffer from fragmentation concerns – but has other trade-offs instead (again, more on this in a later blog post).

Observing garbage collector behavior

I encourage readers to experiment with the behavior of the garbage collector. Use jconsole (comes with the JDK) or VisualVM (which produced the graph earlier on in this post) to visualize behavior on a running JVM. But, in particular, start getting familiar with garbage collection log output, by running your JVM with (updated with jbellis’ feedback – thanks!):

-XX:+PrintGC-XX:+PrintGCDetails-XX:+PrintGCDateStamps-XX:+PrintGCApplicationStoppedTime-XX:+PrintPromotionFailure

Also useful but verbose (meaning explained in later posts):

-XX:+PrintHeapAtGC-XX:+PrintTenuringDistribution-XX:PrintFLSStatistics=1

The output is pretty easy to read for the throughput collector. For CMS and G1, the output is more opaque to analysis without an introduction. I hope to cover this in a later update.

In the mean time, the take-away is that those options above are probably the first things you want to use whenever you suspect that you have a GC related problem. It is almost always the first thing I tell people when they start to hypothesize GC issues; have you looked at GC logs? If you have not, you are probably wasting your time speculating about GC.

Conclusion

I have tried to produce a crash-course introduction that I hope was enlightening in and of itself, but is primarily intended as background for later posts. I welcome any feedback, particularly if things are unclear or if I am making too many assumptions. I want this series to be approachable by a broad audience as I said in the beginning, though I certainly do assume some level of expertise. But intimate garbage collection knowledge should not be required. If it is, I have failed – please let me know.

Here are the options we suggest for troubleshooting Cassandra GC:

-XX:+PrintGCDetails

-XX:+PrintGCDateStamps

-XX:+PrintHeapAtGC

-XX:+PrintTenuringDistribution

-XX:+PrintGCApplicationStoppedTime

-XX:+PrintPromotionFailure

-XX:PrintFLSStatistics=1

It’s surprisingly difficult to find actual, accurate information on these. Some of them I only learned about thanks to the recent “Java Performance” book. In particular, I’d note that PrintGCTimeStamps (which prints time elapsed since the process started) is much less useful than PrintGCDateStamps (which prints wall clock time and date); stick with the latter. Also, PrintApplicationPauseTime doesn’t actually exist. (I’m sure you meant PrintGCApplicationStoppedTime. 🙂

Thanks! Edited to reflect that. And I removed the suggestion for -XX:+PrintGCTimeStamps. I agree that in comparison it’s not useful to the average person.

Is it possible (in theory or practice) to run one altogether in old gen and one in young?

You could, but there is a cost associated to collecting individual generations (I will go into that later; this is what I was hinting at skipping when I talked about the young gen being collected separately). All the garbage collectors in Hotspot only collect young gen separately; when old-gen is collected they might as well collect everything because the added cost that would be implied by supporting collection of old gen without collecting new gen is prohibitive. In short, it has to do with keeping track of references between regions.

The better of the available programming languages do not need GC.

The obvious ones are asm, C etc. but even LISPs don’t really need a GC.

See here for an example: http://www.newlisp.org/MemoryManagement.html

That has nothing to do with being a better language, GC is just a particular form of memory management. It definitely has its place for reducing complexity in large applications.

Did you actually read through that article you linked? The author seems to have completely missed one of the main points of a GC, being to monitor lifetime of recursive/cyclic data structures, a hard problem to solve reliably with manual memory management. The author works around that problem by simply not allowing cyclic data structures, which seems a step backwards to me. If you assume, as he does, that the compiler can track all references then you indeed don’t need a GC because object lifetime becomes a trivial problem.

Sorry if that sounds harsh, just was a bit disappointed by that article.

Great job, but seems similar to Sun’s memory management white paper. Looking forward to part 2. 🙂

Great article. I’m a third year CS student, and this is the first good explanation of Garbage Collecting that I have read, aside from discussions of reference counting in Apple docs. Will you expand on the problems involved with reference counting in later posts?

Looking forward to part 2.

Maybe, I wasn’t necessarily planning to. But the gist of it is that while reference counting can indeed be practical, it is not “obviously” the better option in part because (1) the cost of doing counter increments can actually be quite high, especially if the implementation does it on every pointer write and performs no optimization, and (2) the cost is further prohibitive in a multi-threaded environment as you need to maintain a consistent global counter. The naive way to do this would require a memory barrier/fence for each counter manipulation, which is *very* expensive. There are ways around it potentially, such as maintaining per-thread counts and having the globally consistent counter only count the number of threads referencing the object. But it quickly goes non-trivial in terms of implementation.

Add to this that you still have to solve the problem of cyclic data structures.

In addition, a typical compacting collector has the ability to avoid fragmentation. This is possible in ref counting too, but a lot of the work involved in a compacting collector overlaps with the work you need to do in order to move objects around. (I wonder if anyone has implemented a refcounting scheme that is also copying/compacting…)

Hi, nice overview. You may already be planning to discuss this in a future installment, but the interaction between garbage collectors and virtual memory systems is also of immense importance. I think this is a good starting point for exploring the literature on the topic:

http://lambda-the-ultimate.org/node/2391

Great introduction to the JVM garbage collector. Thanks for taking the time. Looking forward to the next one.

i dont pretend to understand every implication of what you are writing about, but as a non coder (and i suppose a vicarious geek wannabe) i find this post fascinating (and very understandable)… good reading for anyone who has ever wondered when the program they’re using pauses–WTF is it DOING? I look forward to reading more

To very broadly generalize, the pauses you are talking about are due to I/O (waiting on disk or network). GC pauses at the level talked about here are more server-level concerns – not things you will run into in your day-to-day running of apps on your laptop …

Yes. Reagan, to put things into perspective: On a modern desktop system, even with a heap size of a gigabyte or so, a full GC should hopefully be a matter of a few hundred milliseconds or less assuming there is no other problem present (such as the process being swapped out to disk). So unless you are using software with a very large heap, any time you spend actively waiting (to the extent that you perceive it as waiting) is more likely due to disk or network I/O as jhart indicates.

(This is why additional memory and/or an SSD disk will often be hugely more “worth” the extra money than a a 20% faster CPU for typical desktop situations.)

Excellent post. Thank you very much for the explanation. As a computer science student it helps a lot to hear things explained so simply.

Looking forward to future posts int he series.

great post! Looking forward for the series..

Good stuff. This video, “Everything I Ever Learned about JVM Performance Tuning @twitter”

http://www.infoq.com/presentations/JVM-Performance-Tuning-twitter

has very clear advice on tuning garbage collection and analysing memory use under the HotSpot JVM. For anyone who is interested, I recommend it as a follow-on to this post.

I have this curious question about the working memory set along with Java GC. Given a program running on explicit memory management, the active working memory set is often associated with which part of the application access, the more areas that is accessed, the larger the set.

Now for Java, because of the automatic memory management along with GC, it becomes even if the application is idle, GC still functions, accessing memory performing tracing and so forth that is relevant to the GC process.

Will anyone here agree that such activities is counter productive to VMM where idle pages can be paged out to the disk for other active processes in a memory constraint situation ?

For long standing java application that occupies a large amount of memory, the working set will be large even if the application is waiting for input in an idle state and have a large data structure in the memory.

Generally if the program is written in C, the active working set will be small, but Java will be large because periodically the GC will be accessing live objects.

Is my understanding incorrect, or the implementation of JVM is coping with this using some techniques ? Please kindly advice.

Thanks

Someone linked to the bookmarking collector that attempts to mitigate this.

My personal answer in general though is that in real life in a server-side environment you don’t want paging at all to begin with, so the point is pretty moot. Unless someone knows what they are doing, I recommend running with swap turned completely off (or else the relevant processes mlockall():ed) in a production server side environment anyway. Paging to platters is just too slow; even if you’re a C/C++ application without GC, sudden paging is rarely going to be acceptable anyway. If you have use-cases that truly do not fit in memory in a production latency sensitive situation, I tend to be of the opinion that it is probably better the explicitly manage this rather than have the operating system page you in and out – unless your problem domain happens to fit *very* well into the paging abstraction of the OS.

For desktop use it might be more of an issue IMO, since there you might legitimately have an actual working set you as a human is triggering, and you may feel it’s okay to sometimes wait for page-ins. It also seems to be the case that desktop apps have a tendency to leak unused memory over time (probably because they “can”). I don’t know. It’s been years since I “relied” on swap on a desktop personally.

In any case, it is certainly the case that if you do have an application that gets paged out by the OS, with the current JVM the results are worse than with your typical C/C++ application. It’s not just the mere fact that the JVM will touch the entire heap (usually, more or less) during marking, but it’s also that almost by definition the “LRU:ish” approximation of the kernel doesn’t fit the behavior of a garbage collected system. You’ll tend to swap out pages not touched in a long while; which is going to tend to be data in the old-generation (assuming it’s not all touched by the application). But this only makes sense for periods where GC is not happening; as soon as the GC decides to do a full collection of old-gen you get a flurry of paging activity. In addition, promotions from young gen to old gen can also cause paging since you’re indeed moving objects into old-gen. A process which is “supposed” to be fast (from the GC perspective) and done in a stop-the-world pause, so you really do not want paging going on.

I dunno. Maybe with really fast SSD:s, paging is being re-born as a relevant issue. With platters though, my opinion is that it is mostly a relic by now. if you care at all about performance, even in a desktop situation. That opinion is bound to be controversial however and I do not claim that it represents consensus (i.e., my personal opinion only).

You are correct that a full garbage-collection pass will artificially inflate the working-set of pages the JVM is using, beyond what the application actually requires, but the JVM (and most advanced garbage collectors) takes steps to ensure that these full garbage-collection passes are required infrequently. Furthermore, GC is often only triggered when there isn’t enough memory to satisfy a particular allocation request; if you run it any more often than that, you’re spending time doing GC that you’d rather use to run the program itself.

For your specific example, a program waiting for input in an idle state, GC won’t be running since there isn’t any need for it. Thus, the working-set of the JVM with respect to virtual memory won’t be an issue.

One of the big wins of generational garbage collection over a simple mark-and-sweep collector is that it reduces the number of full mark-and-sweep garbage collection passes required. The area of memory used for young objects tends to be smaller, and is accessed in a way that is friendlier to the virtual memory system, so if a quick stop-and-copy pass through this memory area frees up enough memory to satisfy the allocation request, you’ve avoided the expensive mark-and-sweep pass that will bring a lot of virtual pages into physical memory.

Nonetheless, it is still a big limitation of virtually all production garbage collectors, AFAIK – this interaction between virtual memory systems and the collector itself. There are two general approaches to resolving this issue, which you can read about at the link I posted in an earlier comment. At this point it is evidently still not enough of an issue to see a concerted effort on resolving it, so virtual-memory-friendly garbage collectors are still relegated to the world of research. But, it’s something I would expect to change in the next 5-10 years.

Superb Post. It was only yesterday when I was desperately browsing around looking for a good tutorial on how Java GC works, and the very next day I see this on my FB page via CodeChef. Amazing Coincidence!

Anyways, could you please point me to a more implementation level material on this, (only in the case you don’t plan to cover that yourself). I mean I want to see the actual code which performs GC, which I can play around with. I am not sure if its entirely compiler level stuff ( I haven’t taken a compiler class yet), or is it possible to plug in one’s own versions of GC in a hotspot.

If you want to get down to details, I can recommend three papers to read (I was going to point to these later on anyway).

First off, to get some good background and abstraction, read “Uniprocessor Garbage Collection Techniques” at ftp://ftp.cs.utexas.edu/pub/garbage/gcsurvey.ps

You may then be interested in the CMS paper which I’m not able to find right now (i’ll try harder later on).

Then there is the G1 paper at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.63.6386&rep=rep1&type=pdf

In terms of implementation, I recommend downloading the source code for OpenJDK. You’ll find the GCs in hotspot/src/share/vm/gc_implementation

And yes, the code base seems to be such that plugging in your own GC is doable. I am not personally versed enough in the implementation to estimate the effort involved in doing so from scratch, but there is certainly some abstractions in place to do it – look at the pre-existing ones.

“Reference counting will not detect as unreachable cyclic data structures, and has some other problems that cause it to not be the end-all be-all garbage collection choice.”

This is not 100% correct. There are reference counting garbage collector, which can handle cyclic data structures. Adobe Flash Player (Tamarin VM) is an example.

Can you please provide a reference or an explanation of what they actually do? Quite clearly they do something specific which is beyond naive reference counting, or they could not possible handle cyclic data structures.

Note that cPython is an example of a runtime that uses reference counting, and can deal with cyclic data structures. However, it does the latter by falling back to a non-reference counting method. So the mere observation that a particular runtime both uses reference counting and deals with cyclic references does not invalidate the claim.

Whatever a garbage collector is doing, they are going to have to do something beyond basic reference counting in order to handle cyclic references. My main point was that the alluring simplicity and seeming obviousness of refcounting as a solution to GC, has drawbacks.

I Googled some and didn’t find any specific on the Tamarin GC (honestly, Googling for technically deep articles having to do with Flash is often difficult).

Tamarin VM: http://www-archive.mozilla.org/projects/tamarin/

Current development code is available via Mercurial at http://hg.mozilla.org/tamarin-redux

There is a lot of docs in doc\mmgc.

A citation: “Memory management in MMgc is incremental in two respects. Reference

counting is used extensively and provides for prompt deallocation

without running the marking garbage collector. The marking collector

tends to be expensive, so when it does run program work is interleaved

with mark work in order to limit user-visible collection pauses.”

Tamarin’s GC is not a naive reference counting with strong and weak pointer, although weak references are presented in the AS3 SDK itself (in the class Dictionary). Actually, I’ll need to re-read all this stuff because it got updated since I read it last time.

Thanks! So it sounds like it’s doing the same thing; uses ref counting with fallback to a tracing collector.

Basically, yes. Reference counting allows to release memory almost immediately. They also use other tricks, something like “delayed” or “postponed” reference counting.

A CLR team tried to use reference counting in their early prototype. But they abandoned this idea because of performance reason. This prototype should be available on internet.

A few years ago I found some papers about how to release cycles in a reference counted environment. It involved temporarily weakening the reference counters, if only weak counts remain a cycle has been found. (Something along this line, I don’t remember the details.)

On a quick google search I couldn’t find that technique anymore, it seems nowadays everyone switched to concurrent or GC techniques for cycle detection.

That actually makes sense, because whatever you do, to detect cycles (in a generic way) you need to traverse the object graph. GCs need to do the same so there’s bound to be some overlapping in the algorithms. That doesn’t mean, though, that reference counted systems need to fall back to general purpose GCs, you can probably optimize some things when you already know you’re reference counting. I’m thinking of stuff like the write barriers.

“I’m thinking of stuff like the write barriers.” 🙂

Check Tamarin’s source code. 🙂

Wikipedia article http://en.wikipedia.org/wiki/Reference_counting covers GC aspects of reference counting pretty good.

Thanks, will be very handy.

There will be at time when programming languages are smart enough to do efficient garbage collection on their own, without us writing code to do so.

Excellent post. GC never seems to fail in drawing my attention. 🙂

Can you share the implications of different types of hardware or CPU would have on GC process? or is it not very significant ?

In terms of real-life garbage collectors today on hardware people are likely to have, I would say that the number of CPU cores is going to be the most important aspect to be aware for tuning purposes.

Specific hardware features can make a huge difference though; the Azul GC is a good example of this when running on their Vega processors. I’d provide a link but all their material seems hidden behind submit-who-you-are forms.

The Azul C4 collector now runs on stock x86 hardware, and on Linux. You can find some details about C4 in our peer-reviewed ISMM paper (no registration required): http://www.managedruntime.org/webfm_send/4

Excellent post, Peter, with understandable and concise coverage background on GC mechanisms.

For those of you who have the patience to sit through an hour+ overview of these and other basic GC mechanisms, as well as a classification of the various available JVM collectors, you can find my SpringOne talk on the subject on InfoQ, at: http://www.infoq.com/presentations/Understanding-Java-Garbage-Collection.

It’s also worth mentioning the Azul’s JVM, now natively available on Linux x86, provides a concurrent compacting collector for both the old AND young generations, making pauses at any heap size a thing of the past.

Thank you!

I am, as I am sure others are, keenly interested in getting to take your GC out for a spin. Based on the theory I am very excited about it, but nothing beats hands-on experience. Are there plans to make it easier for mere mortals (i.e., individuals) to get to try it out? (I recently submitted a request for evaluation.)

I don’t rely on platforms/langauages that has GC built-in by default.

Why? Its complex, heuristically optimized, unpredictable, has many tuning knobs, is memory wasting and pauses all your threads during GC.

And most important: managing memory yourself improves the design of your program. The time spent to think about mem management is well worth the effort and pays off.

In non GC-ed languages like C/C++ segfaults may happen. But that’s actually good! There is almost no better prove that your programs contains a bug. The same bug in a GC-ed lang would lead to memory leaks and makes it much harder to discover the bug – your customer will probably unhappily have to do it…

Programming is managing resources, open/close, acquire/release, construct/destruct. Managing a valuable resource like memory should not be left to a generic algorithm. Furthermore the RAII concept helps to manage the resources and supersedes additional concepts like dispose() and if(disposing) as in C#.

Academically I tend to incline towards your opinions. However I’m not sure if you notice, but not all programmers are top-notch skillful. If you are one, I am certain your boss will appreciate your service a lot. Unfortunately for business to grow, the decision has to live with facts. The fact is there are developers that are very skillful and developers that are producing good works but not at the level to fully produce beautiful and error-free codes. Choosing between 20% lost in performance which can be easily top up with better machines with faster CPU and more memory, the business decision will be “Are you able to live with a less efficient platform that gives you 99% segmentation fault free applications, or are you able to live with 200% performance gain but 20% possible segmentation fault”

Even SIT and UAT can’t sieve out all possible scenarios where segfaults occurs, and if it happens on Xmas Eve…. I feel so sad for the team in charge.

Hence GC has its advantage and the way I look at peers around, I say they are there to stay. So rather than searching for performance gain that we already knew will means you get less developers of that kind of skill set and the cost will be transferred to training and hiring, it might not be exactly a bad choice to transfer the cost to buying better machines and more memory which is more readily available 🙂

I will go further and disagree entirely. The notion that GC is unpredictable while explicit memory management is not is false (not only theoretically; I’ve had malloc() pause for seconds at a time in a production system, effectively pausing all threads). It is true however that the tendency of GC is to be more consistently unpredictable.

I also reject the notion that smart people don’t need GC. I think that’s a very dangerous frame of mind. No matter how smart you think you are, you have limits. Automatic memory management allows you to structure code in ways that you otherwise could not; it makes certain specific problems *much* easier, and makes a lot of things a bit easier – and yes it makes some things harder.

Further, saying that you don’t want to use languages with GC “built-in” is really the opposite of what you want to do. If you *are* going to rely on a garbage collector, it is likely that a language with it built in will have the opportunity to have a better GC than one slapped on top of it after the fact. For example, yes you can put a GC on top of C (witness boehm-gc for example). But there are trade-offs when the runtime environment and/or compiler is not co-operating with you. In the boehm-gc case, it is conservative (rather than precise) because there is no way to determine accurately what constitutes a pointer. It is also limited in other ways, such as the inability to emit read and/or write barriers in code (IIRC it does support utilizing memory mappings to offer a somewhat more incremental mode, but its hands are still tied behind its back).

I also reject entirely the notion that having segfaults is a good thing because it “proves you have a bug”. For starters, whether or not you are segfaulting or getting a null pointer exception, both prove that you have a bug. If you are accidentally retaining pointers, that translates into a memory leak in a GC:ed world – also proving you have a bug (and contrary to the claim, significant memory leaks are typically not difficult to show by simple empirical testing). Further more, an actual segmentation fault proving you have a bug is not very useful compared to the fact that you may have, for example, arbitrary heap corruption due to bugs in your program that do *not* trigger segmentation faults. In other words, sure – a segfault proves you have a bug. But the *lack* of a segfault does *not* prove that you do *not* have a bug, which is the more interesting property.

Further, having a garbage collector is not mutually exclusive with segfaults. For example, in the C + boehm-gc case you still have the same fundamental memory model despite operating with a GC. The GC only removes one very specific reason for a segfault: A dangling pointer leads to a leak instead of an invalid de-ref or arbitrary corruption.

What is the bigger advantage than GC that relates to this is the managed memory model that is enabled by, in part, garbage collection. Arbitrary corruption has the problem completely unrelated pieces of code can affect each other in ways that has no relationship to the problem domain. Consider a function that implements SHA1 for example. In a managed environment, I can typically (depending on how dynamic the language is otherwise) satisfy myself trivially that if the code does have a bug, it is limited to producing an incorrect SHA1 result. I need not worry about a subtle bug in some pointer arithmetic somewhere causing an arbitrary execution bug. I can rest assured of the separation of code and data (from the point of view of bugs in my code; obviously the environment can have bugs). Essentially, it helps keep bugs inside the problem domain, by limiting the amount of ways you can accidentally break out of your abstraction (the memory model in this case).

This is why I hate the fact that in order to e.g. add SSL to a postfix installation, I need to switch on a huge code base of complex native code with a history of security vulnerabilities, leading to a useless trade-off between one type of security (protecting against arbitrary execution attacks) and another type (protecting the data) – even though I’m not Google or any other huge provider and even *if* the managed code would have been 10x slower, it would have been completely irrelevant to me from a deployment perspective.

Overall, there are trade-offs involved in choice of memory management, and neither automatic nor explicit is going to always be the best option. Subtle details will also matter; for example you could easily imagine an explicit memory management interface that still did not expose naked pointers and allowed data to move around; the traditional malloc/free forces you to never move objects around – meaning you cannot compact, and meaning you will have to live with fragmentation concerns.

Ideologically opposing automatic memory management, and excusing it by implications of inferiority of people who “need” GC, is in my opinion mis-guided, and I would never want people to limit themselves to tools (languages) without automatic memory management when solving problems. Nor would I want them to limit themselves to tools that *do* have automatic memory management.

@Peter Schuller:

If performance does not matter then why not use GC-ed language if you can’t resist? But then you don’t want to care about the internals of the GC. If performance is critical then consider a non-GCed language and think about best mem management.This approach is more deterministic than trying to tune a GC. Take also into account that every successful SW finally becomes performance critical or might be used on a mobile.

Carefully selecting the right libraries has nothing to do with GC.

No, you won’t have a null pointer exception because the reference is still valid and GC does not take place. Stale data and memory leaks will be the effects.

We should reason only about correct programs. It does not make sense to say language X behaves bad if it contains bugs.

Many think that GC-ed languages are cool because one does not need think where “delete object;” needs to be called. But this is a fallacy. You still have to decide where to set “reference = null;” or to call a cleanup method at the right position or use a soft reference.

Fragmentation: In C++ you can easily switch to another mem mgmt if fragmentation becomes a problem. [In Java] CMS doesn’t compact, so it’s prone to fragmentation, which will lead to a stop-the-world pause. (From Attila Szegedi’s “Everything I ever learned about JVM”)

GC-ed languages have this distinction between reference and value types. This was needed because if also the primitive types would have to be GC-ed you could go to bed at 6 p.m. Reference types introduce a of memory overhead.

@DKSG: I don’t agree with your “faster CPU and more mem” argument because you also have to take mobile devices into account. What a nice feature if your program makes also good shape on your mobile.

@Gil Tene: I never had problems the API contract. If the docu is not clear about this I don’t use the lib.

The much overlooked and overwhelming benefit of Automatic Memory Management that is built-in to an environment is what it does to the ecosystem of leverage-able libraries and frameworks that evolve.

Without automatic memory management, there is always some sort of “memory management contract” involved in passing anything but the simplest of data structures around: If I allocate an object, and pass it to a library through an API, who frees it? Does the allocator free? Does the receiver free? Do both hold reference counts with the last released refcount freeing? Have we agreed on how we handle cyclic structures? Lists? Graphs? Dictionaries of hashtables of linked lists of sets? Can I pass you one? Are you allowed to store mine in one?

Since there is no “right” contract that is standard (when GC/AMM is not a mandatory part of the environment), leveraging unrelated libraries and frameworks becomes “hard”. Without GC or a standardized memory management contract, objects made in one library cannot safely be used by another (unless they both happen to follow the same contract).

The best evidence for this theory actually translating into practice is the sheer mass of leverageable software available in the Java ecosystem, compared to what is leverageable in other environments that have been around much longer .

I envy those of you who have enough time to write everything yourselves, and not leverage any code that doesn’t follow your chosen memory management contract and discipline. The rest of us are lucky to have GC around.

Now if only someone could figure out how to take away the runtime annoyances of actually having it around… ;).

I liked the article as a overview of the JVM collector types. The additional GC log settings are good to see too. A reference to the visualvm gc plugin would be good. It requires running jstatsd and gives a lot of graphical insight into how your collectors are functioning; Oracle covers this in their intro tutorial [1]. Also, Jon Masamitsu’s blog [2] is a fantastic resource for all things JVM GC. I really recommend looking at that after this blog to get a lot deeper into what the JVM is doing during GC.

[1] http://www.oracle.com/webfolder/technetwork/tutorials/obe/java/gc01/index.html

[2] https://blogs.oracle.com/jonthecollector/